So I’ve been pretty open about the fact that I’ve moved from data warehousing in the television and online ad industries to data warehousing in the gaming industry. The problem domains are so incredibly different. In the television and ad industries, there’s a relatively small amount of data that people are actually concerned about. Generally speaking, those industries are most interested in how many people saw something (viewed the ad), how many people interacted with it (clicked on it), and whether they went on to perform some other action (like buying a product).

However, in the gaming industry we’re interested in literally everything that a user does – and not in the creepy way. The primary goals are to monitor and improve user engagement, user enjoyment, and core business KPIs. There are a lot of specific points to focus on when trying to gather this information, and right now the industry standard appears to be a highly generalized event/payload system.

For a highly successful game like Temple Run (7M DAU [gamesbrief]), it takes only about 150 events per user to reach a billion events per day. Between user segmentation and calculating different metrics, it’s pretty easy to see why you’d have to process parts of the data enough times that you’re processing trillions of events and hundreds of GB of facts per day.

When I see something that looks that outrageous, I tend to ask myself whether that’s really the problem to be solving. The obvious answer is to gather less data, but that’s exactly the opposite of what’s really needed. So is there a way to get the needed answers without processing trillions of events per day? Yes, I’d say that there is, but perhaps not with the highly generic, uncorrelated event/payload system. Any move in that direction would be moving off into technically uncharted territory – though not wholly uncharted for me. I’ve built a similar system before in another industry, albeit with much simpler data.

If you aren’t familiar at all with data warehousing, a ten thousand foot overview (slightly adapted for use in gaming) would look something like this. First, the gaming client determines which facts about user behavior and game performance are interesting to collect. Then it transmits JSON events back to a server for logging and processing. From there the data is generally batch processed and uploaded to a database* for viewing.

So as a basic sanity check, I’m doing some load testing to determine whether it is feasible to gather and process much higher resolution information about a massively successful game and its users than seems to be currently available in the industry. Without going into proprietary details, I’ve manufactured analytics for a fake totalhelldeath game. It marries Temple Run’s peak performance with a complicated economy resembling Eve Online’s.

From there, I’m compressing days of playtime into minutes and expanding the user base to be everyone with a registered credit card in the app store (~400M people as of 2012) [wikipedia]. The goal here is to see how far it’s possible to reasonably push an analytics platform in terms of metrics collection, processing, and reporting. My best estimate for the amount of data to be processed per day in this load test is ~365 GB/day of uncompressed JSON. While there’s still a lot that’s up in the air about this, I can share how dramatically the design requirements differ:

Previously:

- Reporting Platform: Custom reporting layer querying 12TB PostgreSQL reporting databases

- Hardware: Bare metal processing cluster with bare metal databases

- Input Data: ~51GB/day uncompressed binary (~150TB total uncompressed data store)

- Processing throughput: 86.4 billion facts/day across 40 cores (1M facts/sec)

Analytics Load Test:

- Reporting Platform: Reporting databases with generic reporting tool

- Hardware: Amazon Instances

- Input Data: ~365 GB/day uncompressed JSON (~40k per “hell fact” – detailed below)

- Processing throughput: duplication factor * 8.5M facts/game day (100 * duplication facts/sec)
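The throughput and per-fact figures in the two lists above can be sanity checked with a few lines of arithmetic. This is only a sketch: it assumes 1 GB means 10^9 bytes and leaves the duplication factor out entirely, since that number isn't pinned down yet.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400

# Previous system: 1M facts/sec sustained for a full day.
previous_facts_per_day = 1_000_000 * SECONDS_PER_DAY

# Load test: ~365 GB/day of JSON spread over ~8.5M facts per game day.
# Assumption: 1 GB = 10**9 bytes.
load_test_bytes_per_day = 365 * 10**9
load_test_facts_per_day = 8_500_000

bytes_per_fact = load_test_bytes_per_day / load_test_facts_per_day
facts_per_second = load_test_facts_per_day / SECONDS_PER_DAY

print(f"previous: {previous_facts_per_day / 1e9:.1f} billion facts/day")
print(f"load test: ~{bytes_per_fact / 1000:.0f} KB per fact")
print(f"load test: ~{facts_per_second:.0f} facts/sec before duplication")
```

The per-fact size comes out a little over 40 KB and the base rate a little under 100 facts/sec, which lines up with the rounded numbers in the list.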

I’ve traditionally worked in a small team on products that have been established for years. I have to admit that it’s a very different experience to be tasked with building literally everything from the ground up – from largely deciding what analytics points are reasonable to collect to building the system to extract and process it all. Furthermore, I don’t have years to put a perfect system into place, and I’m only one guy trying to one-up the work of an entire industry. The speed at which I can develop is critical, so maintaining Agile practices [wikipedia], successful iterations [wikipedia], and even the language I choose to develop in are all of critical importance.

The primary motivator for my language choice was a combination of how quickly I can crank out high quality code and how well that code will perform. Thus, my earlier blog post [blog] on language performance played a pretty significant role in which languages saw a prototype. Python (and pypy specifically) seems well suited for the job and it’s the direction I’m moving forward with. For now I’m building the simplest thing that could possibly work and hoping that the Pypy JIT will alleviate any immediate performance shortfalls. And while I know that a JIT is basically a black box and you can’t guarantee performance, the problem space showed high suitability to JIT in the prototyping phase. I foresee absolutely no problems handling the analytics for a 1M DAU game with Python – certainly not at the data resolution the industry is currently collecting.

But I’m always on the lookout for obvious performance bottlenecks. That’s why I noticed something peculiar when I was building out some sample data a couple of days ago. On the previous project I worked on, I found that gzipping the output files in memory before writing to disk actually provided a large performance benefit because it wrote 10x less data to disk. This shifted our application from being IO bound to being CPU bound and increased the throughput by several hundred percent. I expected this to be even more true in a system attempting to process ~365GB of JSON per day, so I was quite surprised to find that enabling in-memory gzip cut overall application performance in half. The implication here is that the application is already CPU bound.
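For anyone who hasn't tried the in-memory gzip trick, here is a minimal sketch of the idea using only the standard library. The event data and the `gzip_in_memory` helper are made up for illustration; this is not the code from either project.

```python
import gzip
import io
import json


def gzip_in_memory(payload: bytes, level: int = 6) -> bytes:
    """Compress payload into an in-memory buffer and return the gzip bytes."""
    buffer = io.BytesIO()
    with gzip.GzipFile(fileobj=buffer, mode="wb", compresslevel=level) as gz:
        gz.write(payload)
    return buffer.getvalue()


# Hypothetical, highly repetitive event data standing in for real output.
events = [
    {"event": "coin_collected", "user_id": i % 100, "amount": 5}
    for i in range(10_000)
]
raw = json.dumps(events).encode("utf-8")
compressed = gzip_in_memory(raw)

print(f"raw: {len(raw)} bytes, gzipped: {len(compressed)} bytes")
# Only the compressed bytes would then be written to disk, e.g.:
#     with open("facts.json.gz", "wb") as fp:
#         fp.write(compressed)
```

The tradeoff is exactly the one described above: fewer bytes hit the disk, but every byte costs CPU time to compress, so it only helps while the application is IO bound.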

It didn’t take much time before I’d narrowed down the primary culprit: json serialization in pypy was just painfully slow. It was a little bit surprising considering this page [pypy.org] cites pypy’s superior json performance over cpython. Pypy is still a net win despite the poor JSON serialization performance, but the win isn’t nearly as big as I’d like it to be. So after a bit of research I found several json libraries to test and had several ideas for how the project was going to fall out from here:

- Use a different json library. Ideally it JITs better than built in and I can just keep going.

- Accept pypy’s slow json serialization as a cost of (much) faster aggregation.

- Accept cpython’s slower aggregation and optimize aggregation with Cython or a C extension later

- Abandon JSON altogether and go with a different object serialization method (protobuf? xdr?)

After some consideration, I ruled out the idea of abandoning JSON altogether. By using JSON, I’m (potentially) able to import individual records at any level into a Mongo cluster and perform ad hoc queries. This is a very non-trivial benefit to just throw away! I looked at trying many JSON libraries, but ultimately settled on these three for various reasons (mostly relating to them working):

- builtin json

- simplejson

- ujson (Incompatible with pypy)

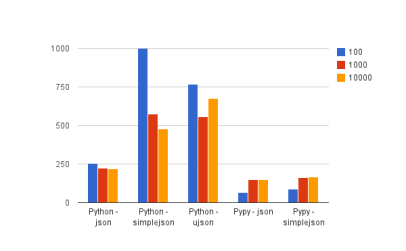

To test each of these libraries, I devised a simple test with the goal of having the modules serialize mock event data. This is important because many benchmarks I’ve seen are built around very small contrived json structures. I came up with the following devious plan in order to make sure that my code couldn’t really muck up the benchmark results:

- create JSON encodable dummy totalhelldeath fact list

- foreach module: dump list to file (module.dump(facts, fp))

- foreach module: read list from file (facts = module.load(fp))
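The three steps above could be sketched roughly like this. The fact structure produced by `make_facts` is invented for illustration (the real totalhelldeath facts are proprietary and much larger), and any library that isn't installed is simply skipped.

```python
import importlib
import os
import random
import tempfile
import time


def make_facts(count=200, seed=0):
    """Build a hypothetical list of nested, JSON-encodable mock facts."""
    rng = random.Random(seed)
    return [
        {
            "user_id": rng.randrange(10**6),
            "session": {"start": rng.random() * 1e9, "level": rng.randrange(50)},
            "events": [
                {"name": "event_%d" % rng.randrange(20), "value": rng.random()}
                for _ in range(25)
            ],
        }
        for _ in range(count)
    ]


def bench(module, facts, path):
    """Return (write facts/sec, read facts/sec, facts read back) for one module."""
    start = time.perf_counter()
    with open(path, "w") as fp:
        module.dump(facts, fp)
    write_secs = time.perf_counter() - start

    start = time.perf_counter()
    with open(path) as fp:
        loaded = module.load(fp)
    read_secs = time.perf_counter() - start
    return len(facts) / write_secs, len(facts) / read_secs, loaded


facts = make_facts()
results = {}
for name in ("json", "simplejson", "ujson"):
    try:
        module = importlib.import_module(name)
    except ImportError:
        continue  # skip libraries that are not installed
    fd, path = tempfile.mkstemp(suffix=".json")
    os.close(fd)
    try:
        results[name] = bench(module, facts, path)
    finally:
        os.remove(path)

for name, (write_rate, read_rate, _) in results.items():
    print(f"{name}: {write_rate:,.0f} facts/sec written, {read_rate:,.0f} facts/sec read")
```

A single timed pass like this is noisy, so treat the numbers as a rough comparison and repeat the run a few times before trusting any one result.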

Just so that everything is immediately obvious: this was run on one core of an Amazon XL instance, and the charts are measuring facts serialized per second. That means that bigger bars are better here.

Read Performance

There’s really no clear standout winner here, but the builtin json library is plainly lacking in both cpython and pypy. It runs a bit faster with cpython, but not by enough to really write home about. However, simplejson and ujson really show that their performance is worth it. In my not-so-expert opinion, I’d say that ujson walks away with a slight victory here.

Write Performance

However, here there is an obvious standout winner. And in fact, the margin of victory is so large that I feel I’d be remiss if I didn’t say I checked file sizes to ensure it was actually serializing what I thought it was! There was a smallish file size difference (~8%), primarily coming from the fact that ujson serializes compact by default.
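The file size difference is easy to reproduce with the builtin library alone, since its default separators put a space after every comma and colon. A small sketch, using a made-up fact:

```python
import json

# Hypothetical fact; the real totalhelldeath facts are not shown in this post.
fact = {"user_id": 42, "items": [1, 2, 3], "stats": {"gold": 10, "xp": 2500}}

default_output = json.dumps(fact)                         # ", " and ": " separators
compact_output = json.dumps(fact, separators=(",", ":"))  # no extra whitespace

print(len(default_output), len(compact_output))
```

Both strings decode to the same object, so the compact form is a free size reduction. The exact percentage depends on the shape of the data, so the ~8% above shouldn't be expected to carry over to other payloads.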

So now I’m left with a conundrum: ujson performance is mighty swell, and that can directly translate to dollars saved. In this totalhelldeath situation, I could be sacrificing as much as 71k + 44k extra core-seconds per day by choosing Pypy over CPython. In relative money terms, that means it effectively increases the cost of an Amazon XL instance by a third. In absolute terms, it costs somewhere between $5.50 USD/day and $16 USD/day, depending on whether it’s necessary to spin up an extra instance.
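The "by a third" figure falls out of a quick calculation. This sketch assumes the XL instance has 4 cores, which the post doesn't state explicitly.

```python
SECONDS_PER_DAY = 24 * 60 * 60
CORES_PER_XL_INSTANCE = 4  # assumption: a 4-core Amazon XL instance

extra_core_seconds = 71_000 + 44_000  # figures from the paragraph above
instance_core_seconds = CORES_PER_XL_INSTANCE * SECONDS_PER_DAY

fraction_of_instance = extra_core_seconds / instance_core_seconds
print(f"{extra_core_seconds:,} extra core-seconds/day "
      f"= {fraction_of_instance:.0%} of one instance")
```

Under that assumption the extra work eats roughly a third of one instance's daily core-seconds. The low end of the dollar range corresponds to absorbing that on an existing instance, and the high end to paying for a whole extra one.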

Food for thought. This load test isn’t going to finish by itself, though, so I’m putting Python’s (lack of) JSON performance behind me. But the standout performance from ujson’s write speed does mean that I’m going to be paying a lot closer attention to whether or not I should be pushing towards CPython, Cython, and Numpy instead of Pypy. In the end I may have no choice but to ditch Pypy altogether – something that would make me a sad panda indeed.

If using gzip is making your usage scenario CPU bottlenecked, take a look at LZ4. It’s the fastest thing out there at the moment, and probably a good tradeoff for a somewhat lower compression ratio. Python bindings are available: http://code.google.com/p/lz4/